Open data is great because it’s open. But not all data is meant to be open. A lot of it is “sensitive.” Data that could otherwise be open is often classified as “sensitive” to protect the personal information and identity of individuals. Sensitive data may be restricted from publication or cleared for public release, depending on the context.

Everyone who publishes data wants to know which datasets are suitable for public release and which are subject to privacy considerations. Sometimes the answer is definitive: the data may be protected under the Health Insurance Portability and Accountability Act (HIPAA), the Family Educational Rights and Privacy Act (FERPA), or other federal, state, and local laws and policies. But sometimes there is little or no guidance for determining whether data is sensitive.

GovEx gets this question frequently: What data is safe to release?

The truth is that data sensitivity, like most things, depends. Federal, state, and local laws (such as the Freedom of Information Act, or FOIA, and state Sunshine Laws) require governments to disclose public information, and they also set guidelines for what is private. Sensitivity depends not only on those laws but also on the judgment of the person in charge of classifying the data.

This is usually the role of a Public Information Officer or a Chief Information Security Officer. A data steward’s worst fear is the inadvertent disclosure of an individual’s private data. (Or is it that the open data goes unused?)

Even if the data steward follows state and local privacy laws, an individual’s identity may still be revealed. Inadvertent disclosure can happen through the mosaic effect, which occurs when data points from different datasets are systematically combined to reveal (or infer) a person’s identity.
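To make the mosaic effect concrete, here is a minimal Python sketch using made-up records. Neither hypothetical dataset names the person involved in an incident on its own terms, but joining them on two shared fields (date and block) leaves exactly one candidate, which is enough to attach a name. The field names, the records, and the `link` helper are all illustrative assumptions, not any city’s actual data.

```python
from collections import defaultdict

# Hypothetical, made-up records for illustration only.
# Published incident data with names removed.
incidents = [
    {"id": 1, "date": "2017-03-04", "block": "100 MAIN ST", "offense": "ASSAULT"},
    {"id": 2, "date": "2017-03-04", "block": "200 OAK AVE", "offense": "THEFT"},
    {"id": 3, "date": "2017-03-05", "block": "100 MAIN ST", "offense": "THEFT"},
]
# A second public source (for example, local news items) that does mention names.
news_reports = [
    {"name": "A. Resident", "date": "2017-03-04", "block": "100 MAIN ST"},
    {"name": "B. Resident", "date": "2017-03-05", "block": "300 ELM ST"},
]


def link(left, right, keys):
    """Join two datasets on shared fields and keep only unambiguous matches.

    A left-hand record whose key values match exactly one right-hand record
    is treated as re-identified, and that record's name is attached to it.
    """
    index = defaultdict(list)
    for row in right:
        index[tuple(row[k] for k in keys)].append(row)

    reidentified = []
    for row in left:
        # .get() does not trigger the defaultdict factory, so misses return [].
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a single candidate pins down one person
            reidentified.append({**row, "name": candidates[0]["name"]})
    return reidentified


if __name__ == "__main__":
    for match in link(incidents, news_reports, keys=("date", "block")):
        print(match["id"], match["offense"], match["name"])
```

Run as a script, this prints one line, `1 ASSAULT A. Resident`. Incidents 2 and 3 each share a date or a block with a news item, but not both, so only incident 1 is linked.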

Combatting the Mosaic Effect

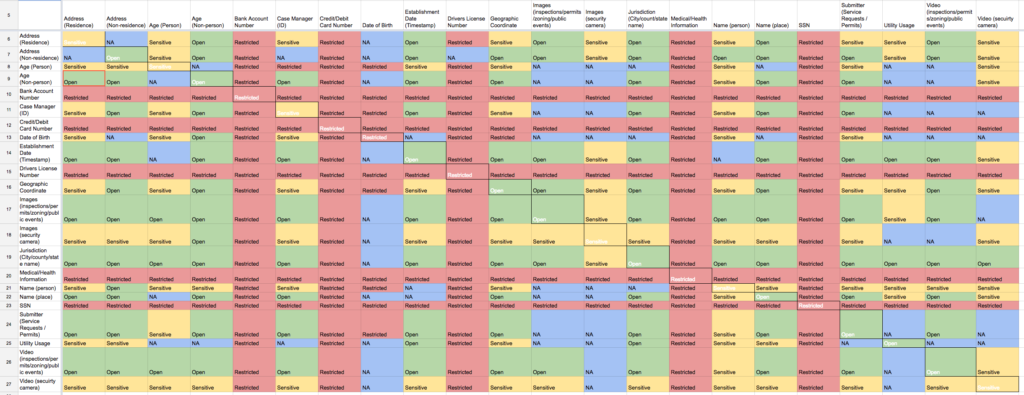

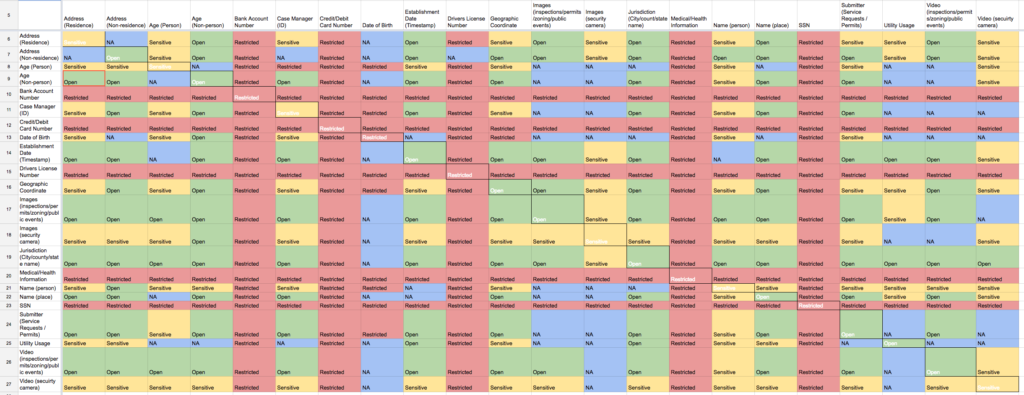

To push this conversation beyond a simple list, we are developing a “Privacy Matrix” to help cities understand the connections between the various data fields in relation to their specific legal obligations.

Screenshot of the GovEx Privacy Matrix.

For example, geolocation data isn’t protected in isolation, but what if it’s combined with a date or criminal offense category? How does its sensitivity change? When does it become private? This matrix is designed to help data practitioners make those connections between data points.
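One way to picture such a matrix is as a symmetric lookup table: geolocation combined with a date has the same sensitivity as a date combined with geolocation, and the diagonal holds each field’s sensitivity on its own. The Python sketch below assumes a hypothetical 0 to 3 scale and made-up entries for the three fields mentioned above; it is not the actual content of the GovEx Privacy Matrix.

```python
# Hypothetical sensitivity scale: 0 = public, 1 = low, 2 = moderate, 3 = high.
# Entries are illustrative assumptions, not values from the GovEx Privacy Matrix.
# Keys are frozensets, so ("date", "geolocation") and ("geolocation", "date")
# hit the same entry; a one-element key is a field's sensitivity on its own.
MATRIX = {
    frozenset({"geolocation"}): 0,
    frozenset({"date"}): 0,
    frozenset({"offense_category"}): 0,
    frozenset({"geolocation", "date"}): 2,
    frozenset({"geolocation", "offense_category"}): 2,
    frozenset({"date", "offense_category"}): 1,
}


def pair_sensitivity(a: str, b: str) -> int:
    """Return the sensitivity of fields a and b combined (or of a alone if a == b).

    Raises KeyError for a pair the matrix does not cover.
    """
    return MATRIX[frozenset({a, b})]


print(pair_sensitivity("geolocation", "geolocation"))  # geolocation on its own
print(pair_sensitivity("date", "geolocation"))  # argument order does not matter
```

Keying on an unordered set means each pair is stored once, so the lookup cannot return different answers depending on which field is listed first.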

Creating this resource was a challenge. A city might store thousands of data fields in its systems, with millions of potential combinations of those fields. What is the exact sensitivity of a given combination? In addition, some combinations do not make sense or never appear in real-world datasets.

Our resource offers one interpretation of the sensitivity of 22 data fields, both individually and in pairs. This gives a rough sense of how sensitivity changes when one field is combined with another, but the matrix cannot yet assess combinations of three or more fields.
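The arithmetic behind this limit is easy to check. With n fields there are C(n, 2) = n(n - 1)/2 distinct pairs, C(n, 3) triples, and 2^n - 1 non-empty combinations of any size. The short Python sketch below uses the standard library’s `math.comb`; the field count of 2,000 is an illustrative assumption standing in for “thousands.”

```python
from math import comb


def combination_counts(n_fields: int, max_size: int = 3) -> dict:
    """Number of distinct combinations of each size from 1 to max_size."""
    return {k: comb(n_fields, k) for k in range(1, max_size + 1)}


def total_combinations(n_fields: int) -> int:
    """Number of non-empty combinations of any size (every non-empty subset)."""
    return 2 ** n_fields - 1


print(combination_counts(22))       # singles, pairs, and triples of 22 fields
print(total_combinations(22))       # every possible combination of 22 fields
print(combination_counts(2000, 2))  # pairs alone for a hypothetical 2,000 fields
```

For 22 fields this gives 231 pairs but 1,540 triples and 4,194,303 combinations in total, which is why a two-dimensional table stops at pairs. For 2,000 fields, pairs alone number 1,999,000.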

The next logical step is to create a comprehensive tool that calculates a sensitivity score for any combination of data fields. We would also like to let cities plug in their specific privacy policies, or provide other background context, to inform those scores.
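As a rough illustration of what such a tool might compute, the Python sketch below scores an arbitrary set of fields. Its aggregation rule is an assumption, not GovEx’s method: the baseline is the highest single-field or pairwise score in the combination, any field or pair the table does not cover is treated conservatively as the top score, and city-specific policy rules, each naming a set of fields (possibly three or more) and a minimum score, can raise the result. The fields, scores, and rule are hypothetical.

```python
from itertools import combinations

# Hypothetical scale (0 = public ... 3 = high) and made-up pairwise scores.
PAIRWISE = {
    frozenset({"geolocation"}): 0,
    frozenset({"date"}): 0,
    frozenset({"offense_category"}): 0,
    frozenset({"age"}): 0,
    frozenset({"geolocation", "date"}): 2,
    frozenset({"geolocation", "offense_category"}): 1,
    frozenset({"date", "offense_category"}): 1,
    frozenset({"age", "geolocation"}): 1,
    frozenset({"age", "date"}): 1,
    frozenset({"age", "offense_category"}): 1,
}
MAX_SCORE = 3


def score(fields, pairwise, policy_rules=(), unknown=MAX_SCORE):
    """Estimate the sensitivity of a combination of fields (assumed heuristic).

    Baseline: the highest score among the single fields and pairs in the
    combination; anything missing from `pairwise` counts as `unknown`.
    Each policy rule is (required_fields, minimum_score): if the combination
    includes every required field, the score is raised to at least that minimum.
    """
    fields = set(fields)
    parts = [frozenset({f}) for f in fields]
    parts += [frozenset(pair) for pair in combinations(sorted(fields), 2)]
    result = max((pairwise.get(part, unknown) for part in parts), default=0)
    for required, minimum in policy_rules:
        if set(required) <= fields:
            result = max(result, minimum)
    return result


# A hypothetical city rule: age, location, and offense together are high sensitivity.
CITY_RULES = [({"age", "geolocation", "offense_category"}, 3)]

print(score({"geolocation"}, PAIRWISE))
print(score({"geolocation", "date"}, PAIRWISE))
print(score({"age", "geolocation", "offense_category"}, PAIRWISE))
print(score({"age", "geolocation", "offense_category"}, PAIRWISE, CITY_RULES))
```

Without the city rule, the three-field combination scores only 1, because none of its pairs is individually sensitive; the policy rule is what captures a three-way risk that a pairwise table misses. Treating unknown combinations as the top score errs on the side of privacy, at the cost of flagging more data for review.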

We would love to learn about your city’s data privacy needs, and would appreciate any feedback on how this tool can be useful to you.